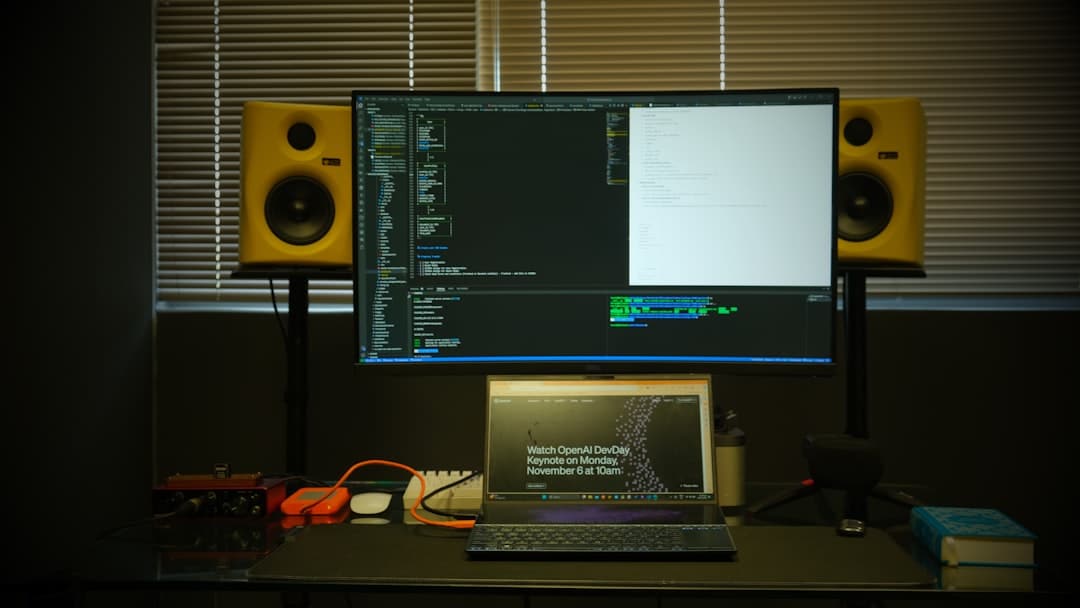

How to Connect LM Studio to a Remote Server

Whether you’re working on AI development, managing machine learning workflows, or simply exploring what local large language models can do, connecting LM Studio to a remote server gives you access to far more capable hardware than your own. With the server handling the heavy lifting and your local instance acting as a client, you can offload resource-intensive tasks and get a smoother experience even on modest local hardware.

TL;DR: Connecting LM Studio to a remote server lets you run large language models on powerful cloud or remote machines while still interacting with them locally. You need access to a remote server with the necessary software installed and ports configured, and you need to tell LM Studio how to reach it. In practice, that means setting up SSH access, running a model inference service on the server, and configuring LM Studio to route requests to it. Proper security and network configuration are essential to make this process reliable and safe.

Why Connect LM Studio to a Remote Server?

Local hardware often struggles with modern large language models due to high memory and processing requirements. By leveraging a remote server, especially one equipped with high-end GPUs, you can:

- Run larger models: Work with 13B, 30B, or larger models that would not fit in your local machine’s memory.

- Improve performance: Speed up inference with faster CPUs and more powerful GPUs.

- Free up local resources: Continue using your local system for other tasks while the remote server handles the computation.
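A rough rule of thumb shows why larger models outgrow typical local machines: the weights alone need about parameters × bits per weight ÷ 8 bytes. The sketch below applies that formula; it deliberately ignores the KV cache, activations, and runtime overhead, so treat the numbers as lower bounds. The function name is illustrative.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory in GB (1 GB = 10**9 bytes) needed just to hold the weights.

    Ignores KV cache, activations, and framework overhead, so the real
    requirement is higher than this estimate.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for size in (7e9, 13e9, 30e9):
        fp16 = weight_memory_gb(size, 16)
        q4 = weight_memory_gb(size, 4)
        print(f"{size / 1e9:.0f}B params: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate a 13B model needs roughly 26 GB at 16-bit precision before any overhead, and a 30B model roughly 60 GB, which is why a GPU server becomes attractive; 4-bit quantization cuts those figures to about a quarter.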

Prerequisites

Before getting into the steps, make sure you have the following in place:

- Remote server access: An SSH-accessible server with Python, required ML libraries, and the model you want to use.

- Compatible model: The model should be one that LM Studio supports or can interact with over an inference API.

- LM Studio installed: You should already have LM Studio running locally on your machine.

Now let’s break down the actual setup process into manageable parts.

Step 1: Configure Your Remote Server

The first step is making sure your remote machine is ready to take requests.

- Install necessary packages: Ensure you have Python, PyTorch, Hugging Face Transformers, and any other dependencies needed for model inference.

- Load your model: Using the Hugging Face Transformers library, load the desired pretrained model and prepare it to handle inference calls.

- Expose the API: You’ll need an API server to accept requests from your local LM Studio. The FastAPI or Flask frameworks are good choices to wrap your model logic in a simple RESTful or WebSocket interface.

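```python
from fastapi import FastAPI
from pydantic import BaseModel
from transformers import pipeline

app = FastAPI()

# Load the model once at startup; "gpt2" is a small placeholder model.
generator = pipeline("text-generation", model="gpt2")


class GenerateRequest(BaseModel):
    # Accept the prompt as a JSON body: {"prompt": "..."}
    prompt: str


@app.post("/generate")
def generate(request: GenerateRequest):
    # The pipeline returns a list like [{"generated_text": "..."}]
    outputs = generator(request.prompt, max_new_tokens=100)
    return {"text": outputs[0]["generated_text"]}
```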

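Once your server script is working, run it with an ASGI server such as Uvicorn (or Gunicorn with Uvicorn workers) and host it on a port reachable from your local network or VPN connection. For example, if the script is saved as server.py, uvicorn server:app --host 127.0.0.1 --port 8000 serves it only on the server’s loopback interface, which suits access through an SSH tunnel; use --host 0.0.0.0 instead to accept connections from your network or VPN.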
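Before wiring up LM Studio, it can help to confirm the endpoint works from your local machine. The sketch below uses only Python’s standard library and assumes the server accepts a JSON body like {"prompt": "..."} and replies with JSON containing a "text" field; adjust both to match your server. The function name ask_remote is hypothetical.

```python
import json
import urllib.request


def ask_remote(prompt: str, url: str = "http://localhost:8000/generate", timeout: float = 60.0) -> str:
    """POST a prompt to the remote /generate endpoint and return the generated text.

    Assumes the server accepts {"prompt": ...} and answers with {"text": ...}.
    """
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises an exception on connection errors, timeouts, and HTTP error codes.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return payload["text"]
```

Once the server (and any tunnel) is running, calling ask_remote("Say hello") should return text generated on the remote machine.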

Step 2: Secure the Connection

Security is important when exposing a model API on a remote machine. Consider the following precautions:

- Use SSH tunnels: Keep the API bound to the server’s localhost and reach it through an SSH tunnel, so it is never directly exposed to the network.

- Enable firewalls: Restrict the server’s open ports to only allow known, trusted IPs.

- SSL/TLS encryption: If you’re using HTTP APIs over public networks, consider securing endpoints with HTTPS using certificates from Let’s Encrypt or similar providers.
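A firewall is the right place to enforce an allowlist, but the same idea can also be expressed in application code as an extra layer, for instance inside a request middleware. A minimal sketch with Python’s standard ipaddress module; the entries in ALLOWED_NETWORKS are placeholders, and the VPN subnet is hypothetical.

```python
import ipaddress

# Placeholder allowlist: replace with your own trusted addresses and ranges.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("127.0.0.1/32"),  # local connections, e.g. arriving via an SSH tunnel
    ipaddress.ip_network("10.8.0.0/24"),   # hypothetical VPN subnet
]


def is_allowed(client_ip: str) -> bool:
    """Return True if client_ip falls inside one of the allowed networks."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Malformed addresses are rejected outright.
        return False
    # An IPv6 address is never "in" an IPv4 network (and vice versa), so it is rejected here.
    return any(address in network for network in ALLOWED_NETWORKS)
```

In a FastAPI app the client address is available as request.client.host, which a middleware could pass to is_allowed; behind a reverse proxy you would need the forwarded client address instead.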

Example SSH tunnel command:

```shell
ssh -L 8000:localhost:8000 user@remote-server.com
```

This command forwards your local port 8000 to port 8000 on the remote server, carrying the traffic securely over SSH.
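If you prefer to start the tunnel from a script, for example alongside other automation, the same command can be launched with Python’s subprocess module. A sketch with a placeholder user and host; the helper names are hypothetical, and the -N flag tells ssh not to run a remote command and simply keep the port forward open. Key-based SSH authentication makes this smoother, since no password prompt is needed.

```python
import subprocess


def tunnel_command(user_host: str, local_port: int = 8000, remote_port: int = 8000) -> list[str]:
    """Build an ssh command forwarding local_port to remote_port on the server's localhost."""
    return [
        "ssh",
        "-N",  # no remote command; only keep the port forward open
        "-L", f"{local_port}:localhost:{remote_port}",
        user_host,
    ]


def open_tunnel(user_host: str, local_port: int = 8000, remote_port: int = 8000) -> subprocess.Popen:
    """Start the tunnel as a background process; call .terminate() on the result to close it."""
    return subprocess.Popen(tunnel_command(user_host, local_port, remote_port))
```

For example, tunnel = open_tunnel("user@remote-server.com") starts the forward, and tunnel.terminate() closes it when you are done.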

Step 3: Connect LM Studio to the Server

LM Studio is designed to work with local and remote inference APIs. Here’s how to configure it to talk to a custom endpoint:

- Open LM Studio and go to the model selection interface.

- Select the Custom API or Remote Server option, if your version offers one; menu names vary between releases.

- Enter the endpoint address, which should look like http://localhost:8000/generate if tunneling is set up.

- Map the input and output parameters as needed. Make sure LM Studio knows which JSON fields contain the prompt and the reply.
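Field mapping matters because servers put the reply in different places: a raw Transformers text-generation pipeline returns a list like [{"generated_text": "..."}], while other servers use a top-level "text" field or nest it under "choices". The hypothetical helper below illustrates how a dot-separated field path resolves against a parsed JSON response; it is not LM Studio’s own mapping syntax.

```python
from typing import Any


def get_field(payload: Any, path: str) -> Any:
    """Follow a dot-separated path (e.g. "0.generated_text") into parsed JSON.

    Numeric segments index into lists; other segments are dictionary keys.
    Raises KeyError, IndexError, ValueError, or TypeError if the path does not match.
    """
    current = payload
    for segment in path.split("."):
        if isinstance(current, list):
            current = current[int(segment)]
        else:
            current = current[segment]
    return current
```

Trying a candidate path against a real response from your server is a quick way to confirm which field holds the reply before entering it in LM Studio.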

Test the setup with a small prompt to make sure the response comes from the remote machine. Monitor CPU/GPU usage on the remote server to confirm that it is doing the heavy lifting.

Bonus: Automation and Optimization

Once everything is connected and functioning, you can improve your workflow with these extras:

- Auto-start scripts: Set up your remote model server to launch automatically on boot using systemd or pm2.

- Load balancing: If working in a team, allow multiple users to connect by implementing basic load balancing using Nginx or HAProxy.

- Scripting interface: Use LM Studio’s command-line interface to script prompts and capture responses, enabling batch processing.
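Whether prompts go through LM Studio’s CLI or straight to the server, batch processing comes down to a loop that sends each prompt and records the reply. The sketch below writes one JSON line per prompt; send is any function that takes a prompt and returns text (for example, a client function that posts to your /generate endpoint), and all names here are hypothetical.

```python
import json
from typing import Callable, Iterable, TextIO


def run_batch(prompts: Iterable[str], send: Callable[[str], str], out: TextIO) -> int:
    """Send each prompt with `send` and write {"prompt", "response"} records as JSON Lines.

    A failed request is recorded with an "error" field instead of stopping the batch.
    Returns the number of prompts that succeeded.
    """
    succeeded = 0
    for prompt in prompts:
        record = {"prompt": prompt}
        try:
            record["response"] = send(prompt)
            succeeded += 1
        except Exception as exc:  # keep going if a single request fails
            record["error"] = str(exc)
        out.write(json.dumps(record) + "\n")
    return succeeded
```

Passing the send function in, rather than hard-coding an HTTP call, keeps the loop independent of how you reach the model and makes it easy to test with a stub.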

Troubleshooting Common Issues

If things don’t work as expected, here are a few common issues and how to fix them:

- Timeouts: Check that your server is actually reachable, the correct port is open, and, if you use one, the SSH tunnel is still running.

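- Wrong format: Make sure your server returns output in the format LM Studio expects, typically JSON with a “text” field. A raw Transformers text-generation pipeline returns a list like [{"generated_text": ...}], so you may need to reshape it.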

- Performance issues: If responses are too slow, switch to a smaller or quantized model, or move to a server with more GPU capacity.
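For timeouts, the first question is whether anything is listening at all. A quick check with Python’s socket module can be run locally against the tunnel port, or on the server against the API port; the helper name is hypothetical.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False
```

If port_is_open("localhost", 8000) fails on your machine but the same check succeeds on the server, the problem likely lies in the tunnel or firewall rather than the model server.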

Future Improvements

This method is flexible and scalable. In the future, LM Studio may include built-in integrations with popular hosting platforms or native support for containerized deployments. Until then, custom setups like the one described here remain the most effective way to connect local interfaces with powerful remote backend processing.

Conclusion

Connecting LM Studio to a remote server can supercharge your AI development process by combining the accessibility of a local UI with the computational power of cloud or networked infrastructure. With careful setup and secure practices, you can run state-of-the-art models efficiently, even if your local machine is underpowered. Whether you’re a solo developer tinkering on projects or part of a research team, this approach unlocks new possibilities for creativity and performance.