LM Studio Not Loading Some Models? Here’s How to Fix It

So, you’ve just downloaded LM Studio, excited to chat with an AI model (or three), and… whoops! Some of your models won’t load. Whether it’s an error message or just radio silence when you try to fire up a model, don’t worry. You’re not alone.

TL;DR

Some models just won’t load in LM Studio due to format issues, system compatibility, or simple user error. Try checking model files, updating LM Studio, and confirming your system meets requirements. Still stuck? You might need to tweak a setting or two. Let’s dive into it step-by-step.

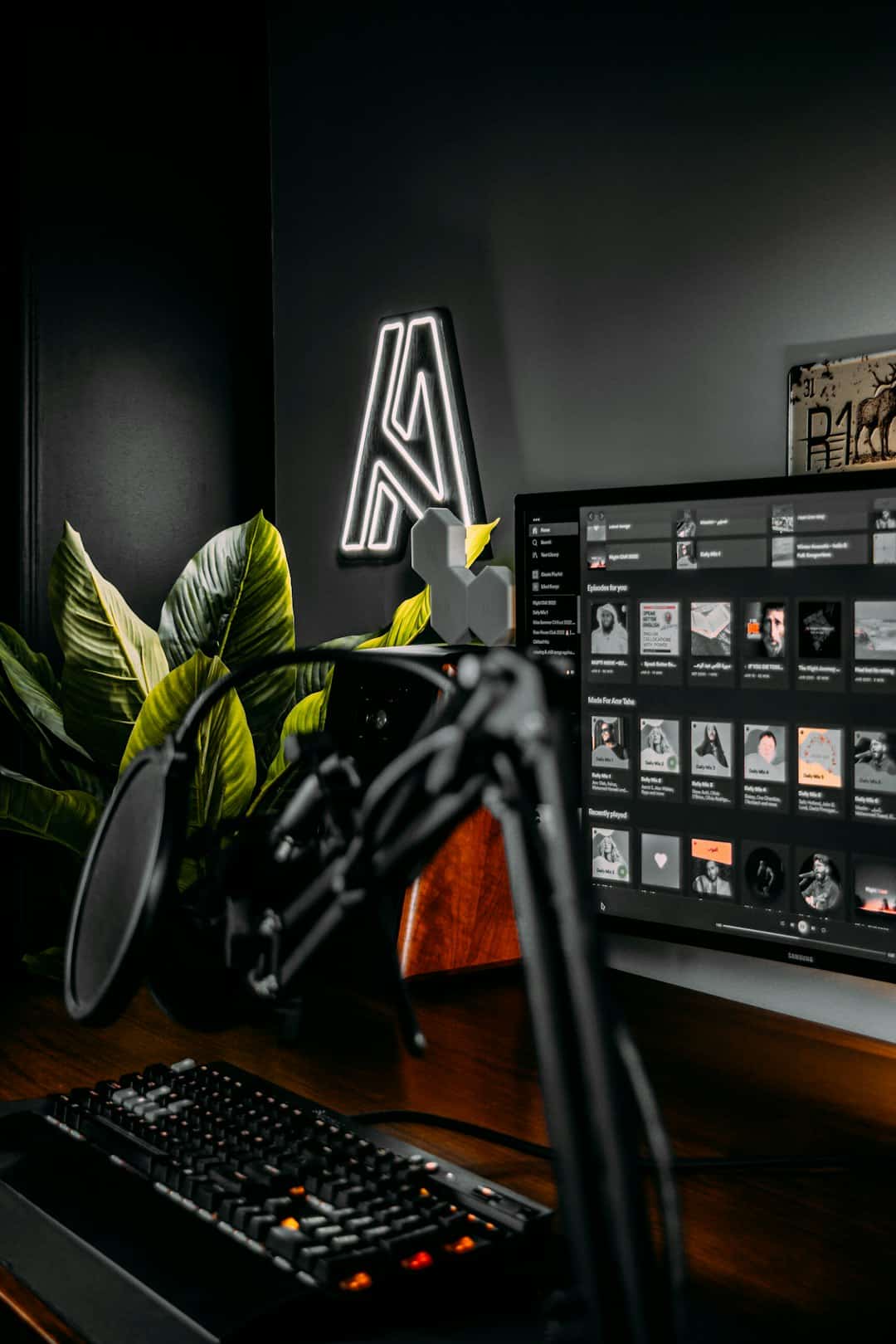

First, What’s LM Studio?

LM Studio is like the Swiss Army knife of local AI model hosting. It helps you run models on your computer without needing cloud access. Super handy. But just like any tool, it has its quirks—especially when dealing with more complex or larger models.

Common Reasons Models Don’t Load

Let’s break down the usual suspects:

- Wrong format: LM Studio only runs certain model formats (mainly GGUF).

- GPU overload: Some models need more video memory (VRAM) than your hardware has.

- Corrupted downloads: A bad download can stop things cold.

- Incorrect settings: Maybe it’s trying to open with the wrong backend.

- Version mismatch: Your LM Studio or model files might be outdated.

Good news? All of these are fixable!

1. Check the Model Format

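LM Studio runs models in GGUF, the file format used by llama.cpp (on Apple Silicon Macs it can also run MLX models). Older GGML files no longer load in current versions, and a model in a format like Safetensors or PyTorch won’t work either, at least not out of the box.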

Fix: Make sure you’re using a .gguf model. On Hugging Face you can filter the model list by the GGUF library to find these.
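A file’s extension can lie (a renamed Safetensors file still ends in .gguf), but its first few bytes usually don’t. Here’s a minimal Python sketch that guesses the format from those bytes. The magic values for GGUF and the legacy GGML variants come from llama.cpp’s file layouts; the Safetensors and ZIP checks are heuristics, and the labels are my own, so treat the result as a hint rather than a verdict.

```python
import sys

# Leading bytes that identify common model file formats.
# GGUF files begin with the ASCII bytes "GGUF". Legacy GGML-family files begin with
# a little-endian uint32 magic ("ggml", "ggmf", "ggjt"), so the bytes appear reversed.
MAGICS = [
    (b"GGUF", "GGUF (loadable by llama.cpp-based runtimes)"),
    (b"lmgg", "legacy GGML (not supported by current llama.cpp)"),
    (b"fmgg", "legacy GGMF (not supported by current llama.cpp)"),
    (b"tjgg", "legacy GGJT (not supported by current llama.cpp)"),
    (b"PK\x03\x04", "ZIP container (e.g. a PyTorch checkpoint or a zipped download)"),
]


def sniff_model_format(path: str) -> str:
    """Guess a model file's format from its first few bytes, ignoring the extension."""
    with open(path, "rb") as f:
        head = f.read(16)
    for magic, label in MAGICS:
        if head.startswith(magic):
            return label
    # Safetensors: an 8-byte little-endian header length, then a JSON header starting with "{".
    if len(head) > 8 and head[8:9] == b"{":
        return "Safetensors (needs converting to GGUF first)"
    return "unknown"


if __name__ == "__main__":
    for p in sys.argv[1:]:
        print(f"{p}: {sniff_model_format(p)}")
```

Save it under any name (say, sniff_format.py) and run `python sniff_format.py path/to/model.gguf`. Anything other than a GGUF result means LM Studio’s llama.cpp engine won’t load the file as-is.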

2. Check Model File Names

LM Studio can sometimes be picky with file names. If a model file has a very long name or unusual characters in it, LM Studio might fail silently.

Fix: Rename your model to something simple, like:

mistral-7b.Q4_K_M.gguf

Shorter names help avoid path or recognition issues.
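LM Studio doesn’t publish a list of characters it dislikes, so this Python sketch takes the conservative route: keep letters, digits, dots, underscores and dashes, turn everything else into a dash, and cap the length (80 characters is an arbitrary choice). The function name is hypothetical, not part of any tool.

```python
import re


def simplify_model_name(name: str, max_len: int = 80) -> str:
    """Suggest a short, plain file name using only letters, digits, '.', '_' and '-'."""
    stem, dot, ext = name.rpartition(".")
    if not dot:  # no extension at all
        stem, ext = name, ""
    # Collapse every run of other characters (spaces, brackets, emoji...) into one dash.
    stem = re.sub(r"[^A-Za-z0-9._-]+", "-", stem).strip("-.")
    # Trim overly long names, then tidy any dash or dot left dangling at the end.
    stem = stem[:max_len].rstrip("-.")
    return f"{stem}.{ext.lower()}" if ext else stem


print(simplify_model_name("Mistral 7B (Instruct) v0.2 [Q4_K_M].GGUF"))
```

Here it prints Mistral-7B-Instruct-v0.2-Q4_K_M.gguf. A name that’s already plain, like mistral-7b.Q4_K_M.gguf, comes back unchanged.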

3. Download Corruption

Yeah, it happens. Large files over 4GB are especially prone to problems if your internet flakes during download.

Fix: Compare the size of the file on disk with the size listed on Hugging Face. If the model doesn’t load AND the file is smaller than it should be, download it again.
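Hugging Face lists each file’s exact size and, for large files stored with Git LFS, usually a SHA256 checksum on the file’s page. This Python sketch checks both: size first (cheap), then the hash only when the size matches. The command-line usage is just an illustration; pass in the numbers you copied from the model page.

```python
import hashlib
import os
import sys
from typing import List, Optional


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly multi-gigabyte) file in 1 MiB chunks so it never has to fit in RAM."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def check_download(path: str, expected_size: int, expected_sha256: Optional[str] = None) -> List[str]:
    """Return a list of problems found; an empty list means the file looks complete."""
    problems = []
    actual = os.path.getsize(path)
    if actual != expected_size:
        problems.append(f"size mismatch: {actual} bytes on disk, expected {expected_size}")
    elif expected_sha256 and sha256_of(path) != expected_sha256.strip().lower():
        # Only hash when the size matches: hashing a truncated file is wasted time.
        problems.append("SHA256 mismatch: the file is corrupted, download it again")
    return problems


if __name__ == "__main__":
    # Usage: python check_download.py FILE EXPECTED_BYTES [EXPECTED_SHA256]
    path, size = sys.argv[1], int(sys.argv[2])
    digest = sys.argv[3] if len(sys.argv) > 3 else None
    issues = check_download(path, size, digest)
    print("\n".join(issues) if issues else "looks complete")
```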

4. Update LM Studio

This one’s easy to miss. Older versions of LM Studio ship an older inference engine that may not recognize newer model architectures, so recently released models can fail to load.

Fix: Download the latest version from the official LM Studio site (lmstudio.ai). Updates often add support for new models along with bug fixes.

5. GPU Not Powerful Enough

Some models, especially big ones like LLaMA 2 70B or Mixtral 8x7B, need a strong GPU with lots of video memory (VRAM). As a rough guide, think 8GB of VRAM or more for comfortable use of 7B-class models.

Symptoms of this:

- Silent crash

- Black or blank output

- Stuck loading screen

Fix:

- Try a smaller model variant (e.g., a 3B model instead of 7B).

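- Use a more heavily quantized version: a Q4 build needs roughly half the memory of a Q8 build, at some cost in quality.

- Offload fewer layers to the GPU, or run entirely on the CPU if needed (slower, but it uses system RAM instead of VRAM).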
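To see why quantization matters, you can estimate the memory a model’s weights need: parameters × bits per weight ÷ 8. The sketch below does that arithmetic in Python. The 1.5 GB overhead for context and buffers is an assumed round number; real usage grows with the context length you set, so use this as a ballpark, not a guarantee.

```python
# Approximate bits per weight for a few GGUF quantization types. Q8_0 and Q4_0 are
# exact for their block layouts (34 and 18 bytes per 32 weights); K-quants such as
# Q4_K_M land a little above Q4_0.
BITS_PER_WEIGHT = {"F16": 16.0, "Q8_0": 8.5, "Q4_0": 4.5}


def estimate_weights_gb(n_params: float, quant: str) -> float:
    """Size of the weights alone in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def fits_in_vram(n_params: float, quant: str, vram_gb: float, overhead_gb: float = 1.5) -> bool:
    """overhead_gb is an assumed allowance for the context (KV cache) and runtime buffers."""
    return estimate_weights_gb(n_params, quant) + overhead_gb <= vram_gb


for quant in BITS_PER_WEIGHT:
    gb = estimate_weights_gb(7e9, quant)
    print(f"7B @ {quant}: ~{gb:.1f} GB of weights, fits in 8 GB VRAM: {fits_in_vram(7e9, quant, 8)}")
```

For a 7B model this gives about 14 GB at F16, 7.4 GB at Q8_0 and 3.9 GB at Q4_0, which is why a Q4 build is the usual pick on an 8GB card.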

6. Missing Model Files

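A GGUF model is usually a single file with the tokenizer and settings built in. Very large models, though, are often split into several parts, and if you’re missing even one, things break.

Fix: Check the model page (like on Hugging Face) and make sure you’ve downloaded ALL the parts. Split files carry a numbered suffix, for example:

- model-00001-of-00003.gguf

- model-00002-of-00003.gguf

- model-00003-of-00003.gguf

Separate tokenizer (.json or .model) and config files belong to the original Safetensors or PyTorch release; a GGUF model doesn’t need them.

Put all the parts in the same folder. LM Studio needs the complete package!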
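If a model comes in several parts, a quick script can tell you which ones you’re missing. This Python sketch assumes the numbered naming pattern that llama.cpp’s gguf-split tool produces (name-00001-of-00003.gguf); files named differently won’t be detected, and it can only spot gaps in a model you have at least one part of.

```python
import re
import sys
from pathlib import Path
from typing import Dict, List, Set, Tuple

# Split GGUF parts follow the naming used by llama.cpp's gguf-split tool,
# e.g. "model-00001-of-00003.gguf".
SHARD_RE = re.compile(r"^(?P<base>.+)-(?P<idx>\d{5})-of-(?P<total>\d{5})\.gguf$")


def missing_shards(folder: str) -> Dict[str, List[int]]:
    """Map each split model's base name to the part numbers missing from `folder`."""
    seen: Dict[Tuple[str, int], Set[int]] = {}
    for p in Path(folder).iterdir():
        m = SHARD_RE.match(p.name)
        if m:
            key = (m["base"], int(m["total"]))
            seen.setdefault(key, set()).add(int(m["idx"]))
    report: Dict[str, List[int]] = {}
    for (base, total), have in seen.items():
        gaps = [i for i in range(1, total + 1) if i not in have]
        if gaps:
            report[base] = gaps
    return report


if __name__ == "__main__":
    print(missing_shards(sys.argv[1]) or "no missing parts found")
```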

7. Check Your Logs

LM Studio keeps logs that often scream the answer, if only you’d look!

Fix: Open LM Studio’s log view (where it lives depends on your version; look for a console or logs panel, or the Developer tab in recent releases). Look for error messages such as:

Invalid model format

Failed to allocate memory

These usually point straight at the cause: a file LM Studio can’t read, or a model too big for your memory.
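If you copy the log text into a file, a few lines of Python can search it for known messages and point you at the matching section of this guide. The message list and hints are illustrative assumptions: the first two entries are the examples above, and the third is an error llama.cpp raises for model architectures it doesn’t know. Real log wording varies by version, so extend the list with whatever your logs actually say.

```python
import sys
from typing import Iterable, List, Tuple

# Lower-case substrings to search for, mapped to the section of this guide that usually helps.
# The first two are the examples above; "unknown model architecture" is a llama.cpp error
# that typically means the runtime is older than the model. Extend the list as needed.
HINTS = {
    "invalid model format": "check the model format (1) or re-download it (3)",
    "failed to allocate memory": "the model is too big for your memory (5)",
    "unknown model architecture": "update LM Studio (4)",
}


def scan_log(lines: Iterable[str]) -> List[Tuple[int, str, str]]:
    """Return (line number, log line, hint) for every line matching a known message."""
    hits = []
    for n, line in enumerate(lines, start=1):
        low = line.lower()
        for needle, hint in HINTS.items():
            if needle in low:
                hits.append((n, line.strip(), hint))
    return hits


if __name__ == "__main__":
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        for n, line, hint in scan_log(f):
            print(f"line {n}: {line}\n  -> {hint}")
```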

8. Backend Problem

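LM Studio can use different “engines” (aka runtimes or backends) to run models, such as CUDA, Vulkan, or CPU-only builds of llama.cpp, plus MLX on Apple Silicon. If the selected one doesn’t match your model or your hardware, the model might not start at all.

Fix: In the runtime settings, check that the selected runtime matches both the file format and your system: a llama.cpp runtime for GGUF files, using a GPU build (such as CUDA or Vulkan) if you have a supported GPU and the CPU build otherwise.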

9. Re-import the Model

Sometimes, things just bug out.

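Fix: Remove the model from LM Studio’s list, then add it again, either by re-downloading it in the app or by importing the file back into your models folder. If you import by hand, LM Studio typically expects a publisher/model-name subfolder layout inside its models directory (for example, models/my-publisher/my-model/mistral-7b.Q4_K_M.gguf). This refresh can do wonders.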

10. Still Not Working? Try Another Model

It could be that the model file is just badly made or not LM Studio-compatible—even if it’s in GGUF format.

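Fix: Try a similar model from a trusted uploader. Some publishers test their files with LM Studio before uploading (the lmstudio-community organization on Hugging Face, for example, maintains GGUF builds of popular models). Check the comments or README for tips.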

Bonus Tips

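- Skip compression: LM Studio can’t read a model stored inside a .zip or .7z archive, so extract it first.

- No spaces in paths: LM Studio doesn’t always get along with folders that have spaces in their names (like “My AI Models”), so a plain path is the safer bet.

- Stick to local paths: Avoid network drives and cloud-synced folders like Dropbox or OneDrive. Sync clients may keep files online-only or locked, and network drives make loading multi-gigabyte files slow.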
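Here’s a small Python sketch that checks a model path against these tips. The folder names and extensions it looks for are crude assumptions (for example, a network drive mapped to a letter like Z: won’t be flagged), so treat a clean result as “probably fine” rather than proof.

```python
from typing import List

# Crude, assumed heuristics matching the tips above; adjust for your own setup.
CLOUD_FOLDERS = ("dropbox", "onedrive", "google drive", "icloud")
ARCHIVE_EXTS = (".zip", ".7z", ".rar", ".tar", ".gz")


def path_warnings(path: str) -> List[str]:
    """Flag model paths that break the bonus tips above."""
    warnings = []
    low = path.lower()
    if low.endswith(ARCHIVE_EXTS):
        warnings.append("file is an archive: extract it first")
    if " " in path:
        warnings.append("path contains spaces")
    if path.startswith(("\\\\", "//")):  # UNC-style network share, e.g. \\server\share
        warnings.append("path looks like a network share")
    if any(name in low for name in CLOUD_FOLDERS):
        warnings.append("path looks like a cloud-synced folder")
    return warnings


print(path_warnings(r"C:\Users\me\OneDrive\My AI Models\mistral-7b.Q4_K_M.gguf"))
```

For the sample path it reports both the spaces and the OneDrive folder.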

Final Thoughts

Troubleshooting LM Studio can feel like taming a digital dragon. But once you understand its preferences (file formats, clean downloads, and GPU memory), you’ll make it sing.

Remember, not all models are created equal. Some just don’t play well with LM Studio, and that’s okay. Try another, learn what works for your setup, and keep experimenting!

Now go forth and load your models with confidence!